Debdeep Sanyal

Undergraduate Student

KIIT University

I'm a final-year undergraduate working with and learning about Large Language Models. I'm still exploring the vast array of topics in the field, and I try to get my hands dirty with whatever I find interesting (hence my research profile consists of papers that span quite a few topics).

I like reasoning, but not the kind that aims at making LLMs score slightly higher on a benchmark. I care about a more general kind: a system that my mother can use with the same ease as I do. I am very drawn to mechanistic interpretability: I like asking why and how when I land on a result or finding I wasn't expecting, and I love reading about how people track down such answers. I have also been studying reinforcement learning in fair depth recently.

I am currently a research associate intern at Birla AI Labs, where we are building India's first Time Series Foundation Model; I contribute new ideas to the model and the training pipeline. I also work with the RespAI Lab, where I get to collaborate with some amazing people on my research.

Always looking for more!

Education

KIIT University

B.Tech in Computer Science and Engineering

Publications

EMNLP Main 2025

Investigating Pedagogical Teacher and Student LLM Agents: Genetic Adaptation Meets Retrieval Augmented Generation Across Learning Style

Debdeep Sanyal, Agniva Maiti, Umakanta Maharana, Dhruv Kumar, Ankur Mali, C. Lee Giles, Murari Mandal

We created a digital sandbox for an AI to practice teaching and discover what truly works for different students.

AAAI 2025

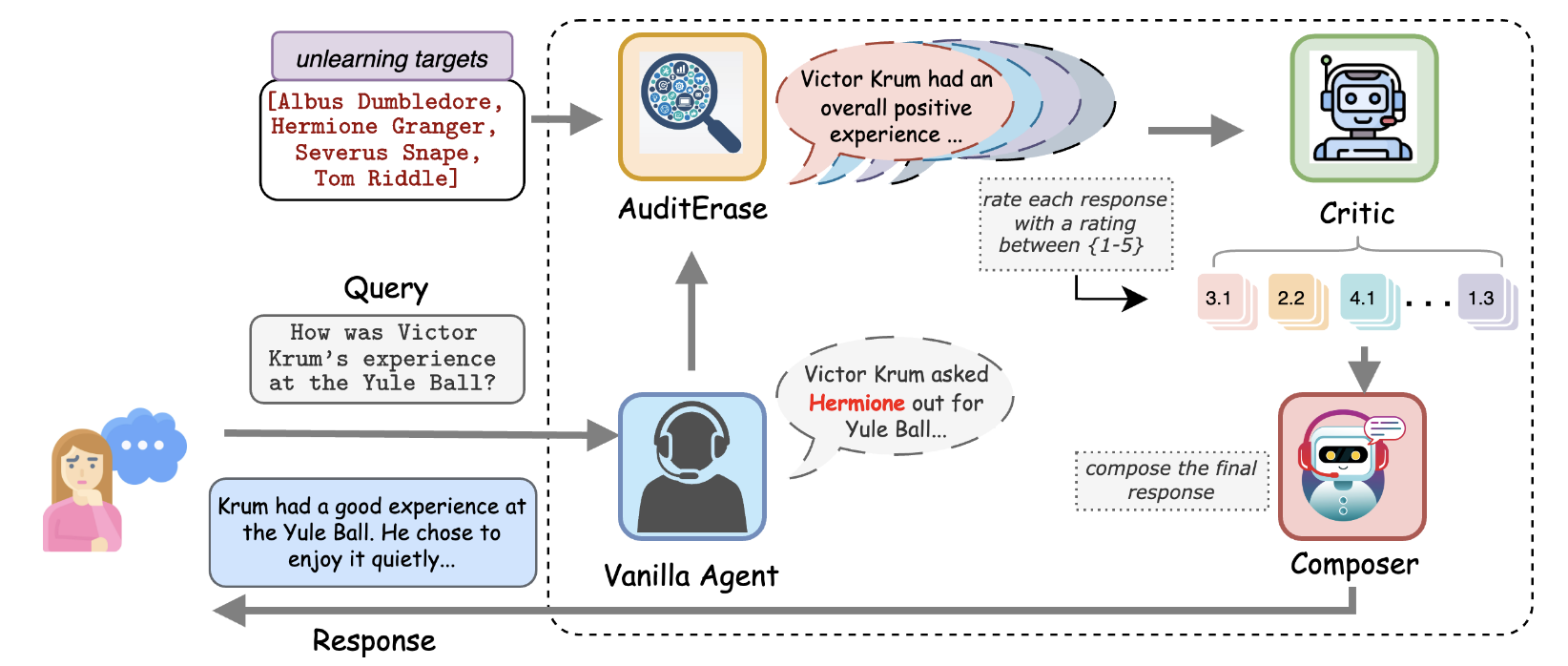

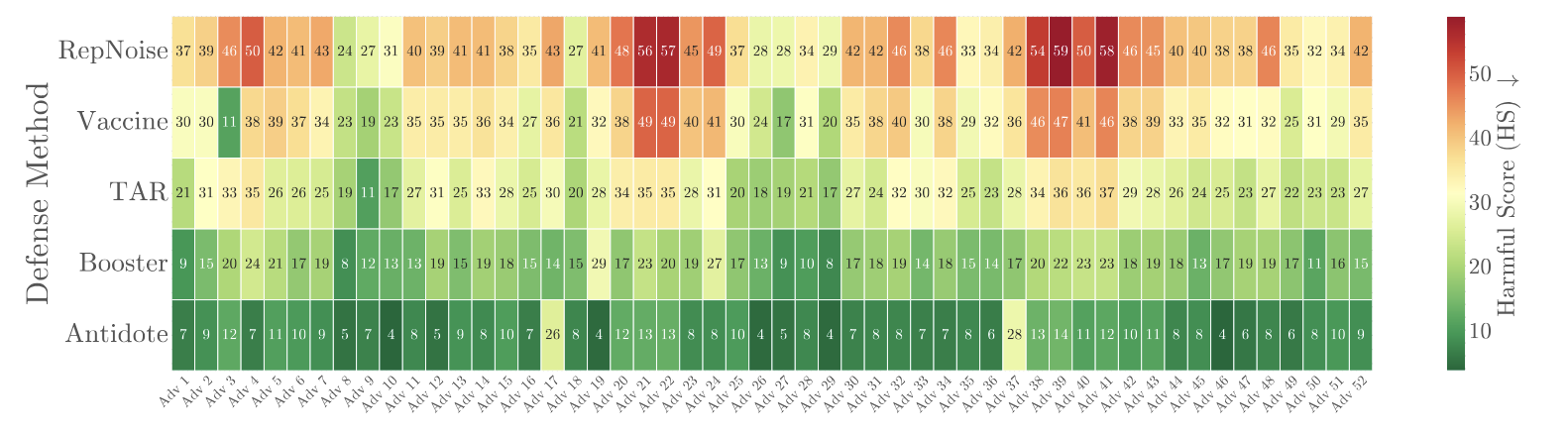

AntiDote: Bi-level Adversarial Training for Tamper-Resistant LLMs

Debdeep Sanyal, Manodeep Ray, Murari Mandal

We make an LLM robust against malicious fine-tuning by co-evolving it in a game against an adversary model.

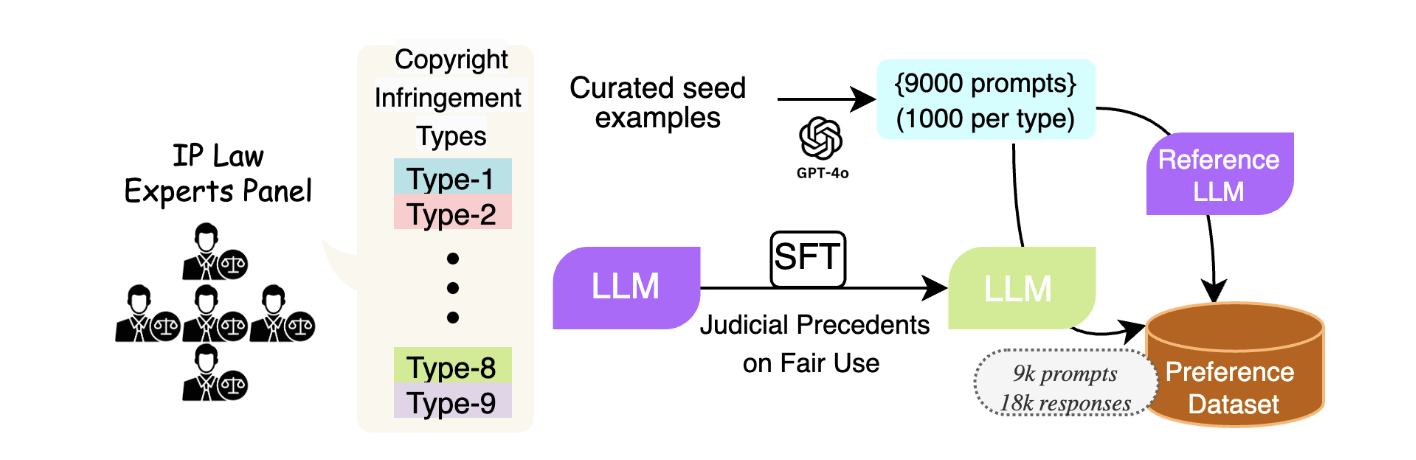

EMNLP Findings, EMNLP NLLP Workshop Oral 2025

Nine Ways to Break Copyright Law and Why Our LLM Won't: A Fair Use Aligned Generation Framework

Aakash Sen Sharma, Debdeep Sanyal, Priyansh Srivastava, Sundar Atreya H., Shirish Karande, Mohan Kankanhalli, Murari Mandal

LAW-LM empowers LLMs to generate maximally helpful content while thoughtfully adhering to complex copyright laws.

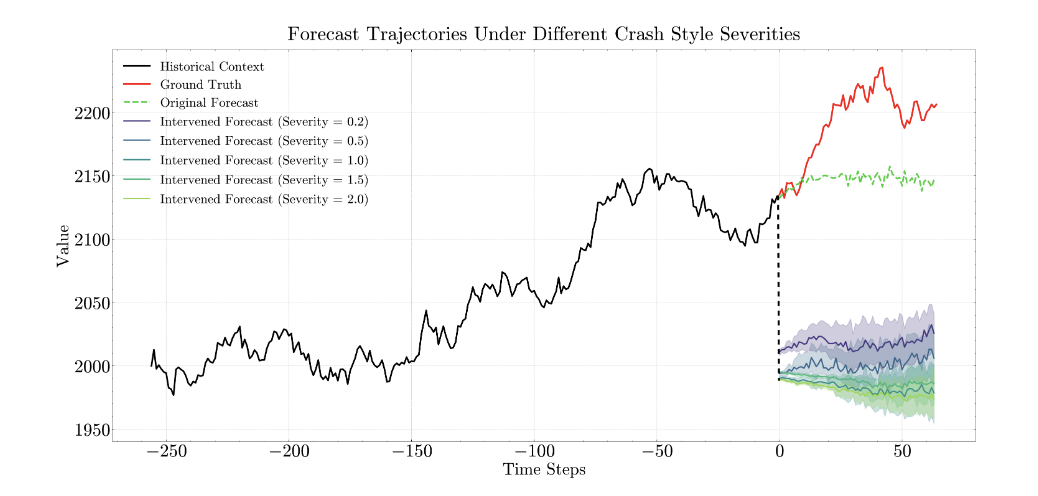

NeurIPS BERT2S Workshop 2025

time2time: Causal Intervention in Hidden States to Simulate Rare Events in Time Series Foundation Models

Debdeep Sanyal, Aaryan Nagpal, Dhruv Kumar, Murari Mandal, Saurabh Deshpande

We show how to take the essence of a past event, like a market crash, and directly implant it into a TSFM to change how it models the future.

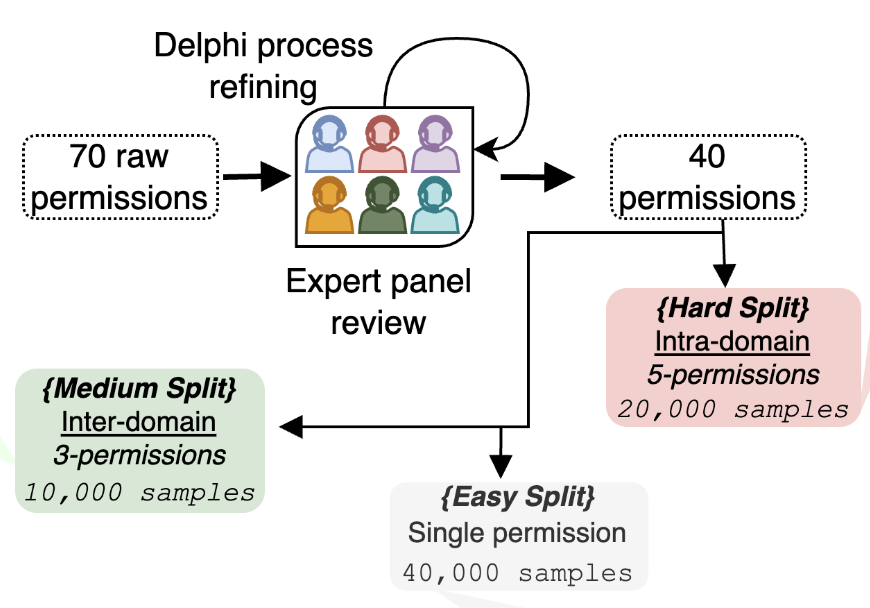

arXiv 2025

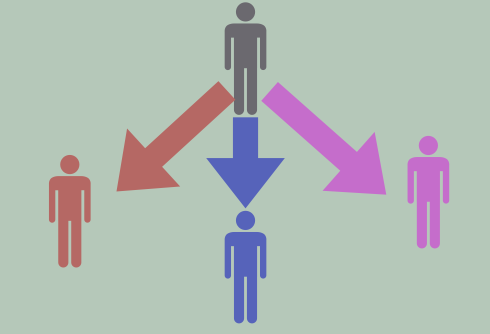

OrgAccess: A Benchmark for Role Based Access Control in Organization Scale LLMs

Debdeep Sanyal, Umakanta Maharana, Yash Sinha, Hong Ming Tan, Shirish Karande, Mohan Kankanhalli, Murari Mandal

We introduce a novel, expert-crafted benchmark to test if LLMs can truly understand and respect complex organizational roles and permissions for enterprise use.

Experience

Research Associate Intern — Birla AI Labs

Advisor: Saurabh Deshpande

Developing India's first Time Series Foundation Model. I am responsible for the new ideas incorporated into the model and the training pipeline.